Traditional, non-electronic music has a direct connection with the physical world. Since antiquity people have fashioned musical instruments out of nearly everything they could get their hands on. Percussive sounds were produced by striking animal hides, wood, metal, and even body parts. (My son drums on nearly every surface he comes in contact with.) Melodic instruments that could vary pitch enriched the musical palette -- tubes that you blew in or across, strings that you plucked or bowed, etc. Of course, the vibrating cords in the human vocal tract are a marvelous instrument in their own right. In each case, some act of performance, using materials from the natural world, creates sound waves.

The sounds of electronic music, on the other hand, are constructed synthetically by computer programs. There are many types of synthesis algorithms, some of which incorporate samples of real-world sounds and some of which are entirely artificial, mathematical creations. Devices built specifically for this purpose are called synthesizers or tone generators, but modern PCs are also powerful enough to synthesize sounds.

With non-electronic music, the act of performance and the production of sound are directly linked. With electronic music, a controller generates performance events in a protocol called MIDI (Musical Instrument Digital Interface). These events carry information such as "MIDI note number 43 ON with velocity 99 on MIDI channel 1" and "MIDI note number 43 OFF on MIDI channel 1". The events are independent of the physical type of controller, which may take the form of a conventional instrument such as a keyboard, guitar, violin, or flute, or of a completely new kind of device that can be "played" to generate MIDI events.
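To make the two example events concrete, here is a minimal Python sketch of how note-on and note-off events are packed into bytes. It follows the MIDI 1.0 channel-message layout: a status byte whose high nibble is 0x9 (note-on) or 0x8 (note-off) and whose low nibble is the channel (0-15 on the wire, shown to musicians as 1-16), followed by a note-number byte and a velocity byte. The `NoteEvent` type and the `encode`/`decode` names are hypothetical illustrations, not part of any library, and other message types and running status are ignored.

```python
from typing import NamedTuple

NOTE_OFF = 0x80  # status high nibble for note-off
NOTE_ON = 0x90   # status high nibble for note-on


class NoteEvent(NamedTuple):
    on: bool        # True for note-on, False for note-off
    channel: int    # 1-16, as musicians number channels
    note: int       # MIDI note number, 0-127
    velocity: int   # 0-127


def encode(event: NoteEvent) -> bytes:
    """Pack a NoteEvent into a 3-byte MIDI message."""
    if not 1 <= event.channel <= 16:
        raise ValueError("channel must be 1-16")
    if not (0 <= event.note <= 127 and 0 <= event.velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    # The wire format numbers channels 0-15, so subtract 1.
    status = (NOTE_ON if event.on else NOTE_OFF) | (event.channel - 1)
    return bytes([status, event.note, event.velocity])


def decode(message: bytes) -> NoteEvent:
    """Unpack a 3-byte note-on/note-off message into a NoteEvent."""
    status, note, velocity = message
    kind = status & 0xF0
    channel = (status & 0x0F) + 1
    if kind == NOTE_ON and velocity > 0:
        return NoteEvent(True, channel, note, velocity)
    if kind in (NOTE_OFF, NOTE_ON):
        # A note-on with velocity 0 is conventionally treated as a note-off.
        return NoteEvent(False, channel, note, velocity)
    raise ValueError(f"not a note message: status byte {status:#04x}")


note_on = encode(NoteEvent(True, 1, 43, 99))
note_off = encode(NoteEvent(False, 1, 43, 0))
print(note_on.hex(" "), "|", note_off.hex(" "))  # 90 2b 63 | 80 2b 00
```

Nothing in these three bytes says what kind of controller produced them, which is exactly why the same events can come from a keyboard, a MIDI guitar, or a new kind of device.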

The MIDI events are also decoupled from the process of tone generation. To actually make music, the stream of MIDI events must be routed to a tone generator running a synthesis algorithm. In common usage, the term synthesizer sometimes refers to a device that combines a controller and a tone generator; in such a device the MIDI events are routed internally and transparently. It is also possible to connect a separate controller with MIDI cables to computers, MIDI routers, or multiple tone generators. The tradeoffs are very similar to those of separate hi-fi components vs. a boombox with all of the functions integrated together. With separate components, you can upgrade and add components selectively. Having everything in one box gives you more convenience and portability with less setup.
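The routing idea can be sketched in a few lines of Python. This is a hypothetical model, not how any particular MIDI router works: events are plain `(channel, note, velocity, is_note_on)` tuples, a `ToneGenerator` merely records what it receives, and `MidiRouter` forwards each event to whatever destinations were connected to its channel. The point is that the controller producing the events knows nothing about which tone generator ends up making the sound.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

# Hypothetical event shape: (channel 1-16, note number, velocity, is_note_on)
Event = Tuple[int, int, int, bool]


class ToneGenerator:
    """Stand-in for a synthesizer module; it records the events it is sent."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.received: List[Event] = []

    def receive(self, event: Event) -> None:
        self.received.append(event)


class MidiRouter:
    """Forwards each event to every destination connected to its channel."""

    def __init__(self) -> None:
        self._routes: DefaultDict[int, List[Callable[[Event], None]]] = defaultdict(list)

    def connect(self, channel: int, destination: Callable[[Event], None]) -> None:
        self._routes[channel].append(destination)

    def send(self, event: Event) -> int:
        """Deliver the event; return how many destinations received it."""
        destinations = self._routes.get(event[0], [])
        for destination in destinations:
            destination(event)
        return len(destinations)


# Usage: a piano module listens on channel 1, a drum module on channel 10.
router = MidiRouter()
piano, drums = ToneGenerator("piano"), ToneGenerator("drums")
router.connect(1, piano.receive)
router.connect(10, drums.receive)
router.send((1, 60, 100, True))    # reaches the piano only
router.send((10, 36, 127, True))   # reaches the drums only
router.send((5, 64, 90, True))     # nothing listens on channel 5; dropped
```

Swapping in a different tone generator only changes the `connect` calls; the controller side is untouched, which is the "separate components" tradeoff described above.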

A sequencer is a special kind of computer program that can record, manipulate, and play back MIDI events. It is the MIDI counterpart of an audio recording and editing program. In fact, many sequencers now combine tracks containing MIDI events with digitized audio tracks. In a complete studio environment, the sequencer can play the digital audio while also routing MIDI streams to tone generators, which create their sounds in real time. A mixer (software or hardware) then combines all of this audio into stereo tracks. A device that wraps a sequencer, a controller, and one or more tone generators in the same box is sometimes called a workstation.
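A sequencer's three jobs (record, manipulate, play back) can be sketched as below. This is a simplified, hypothetical model rather than how any real sequencer stores data: events carry times in seconds instead of MIDI ticks, a velocity of 0 stands for note-off, and transposition is used as one example of an edit.

```python
from dataclasses import dataclass, replace
from typing import Dict, Iterator, List, Tuple


@dataclass(frozen=True)
class NoteEvent:
    time: float     # seconds from the start of the song (real sequencers use ticks)
    note: int       # MIDI note number, 0-127
    velocity: int   # 1-127 for note-on; 0 stands for note-off in this sketch


class Sequencer:
    def __init__(self) -> None:
        self.tracks: Dict[str, List[NoteEvent]] = {}

    def record(self, track: str, event: NoteEvent) -> None:
        """Append an incoming event to the named track."""
        self.tracks.setdefault(track, []).append(event)

    def transpose(self, track: str, semitones: int) -> None:
        """Edit step: shift every note on one track, clamped to 0-127."""
        self.tracks[track] = [
            replace(e, note=min(127, max(0, e.note + semitones)))
            for e in self.tracks[track]
        ]

    def play(self) -> Iterator[Tuple[str, NoteEvent]]:
        """Yield (track, event) pairs from all tracks merged in time order."""
        merged = [(name, e) for name, events in self.tracks.items() for e in events]
        # sorted() is stable, so simultaneous events keep track-then-recording order.
        yield from sorted(merged, key=lambda pair: pair[1].time)


seq = Sequencer()
seq.record("melody", NoteEvent(0.0, 60, 100))
seq.record("melody", NoteEvent(0.5, 60, 0))
seq.record("bass", NoteEvent(0.25, 36, 90))
seq.transpose("melody", 2)  # middle C becomes D
for track, event in seq.play():
    print(track, event.time, event.note, event.velocity)
```

In a real studio setup, `play` would hand each event to a router like the one sketched earlier instead of printing it.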

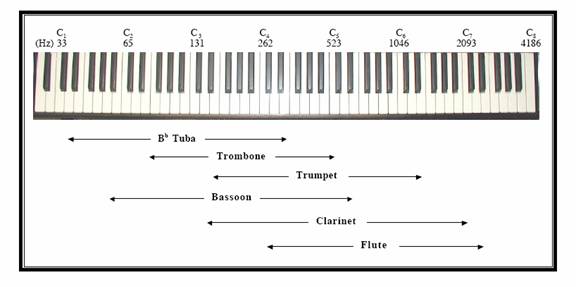

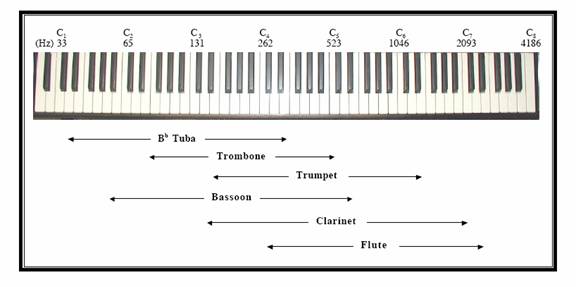

If sound waves have a discernible repeating pattern with a consistent frequency, then we experience a sense of pitch. If the pattern repeats 440 times per second (also referred to as 440 cycles/second or 440 Hz, for Hertz), then we hear the pitch as the A above middle C (A440) in Western music scales. Each octave doubles the frequency: the A an octave above A440 has a frequency of 880 Hz, and the A an octave below A440 has a frequency of 220 Hz. The human ear can hear frequencies from roughly 20 Hz to 20,000 Hz (20 kHz). Here is a piano keyboard with the frequency ranges of some instruments marked.
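The octave relationship is simple arithmetic: shifting by k octaves multiplies the frequency by 2 to the power k, and one cycle lasts 1/f seconds. The Python helpers below are hypothetical names chosen for illustration, and the 20 Hz to 20 kHz bounds are the approximate hearing range quoted above, not hard limits.

```python
def octave_shift(freq_hz: float, octaves: int) -> float:
    """Each octave up doubles the frequency; each octave down halves it."""
    return freq_hz * 2.0 ** octaves


def period_ms(freq_hz: float) -> float:
    """Length of one cycle in milliseconds."""
    return 1000.0 / freq_hz


def audible(freq_hz: float, low_hz: float = 20.0, high_hz: float = 20_000.0) -> bool:
    """True if the frequency is inside the approximate range of human hearing."""
    return low_hz <= freq_hz <= high_hz


print([octave_shift(440.0, k) for k in (-1, 0, 1)])  # [220.0, 440.0, 880.0]
print(round(period_ms(440.0), 3))                     # 2.273
audible_as = [octave_shift(440.0, k) for k in range(-10, 11) if audible(octave_shift(440.0, k))]
print(len(audible_as), audible_as[0], audible_as[-1])  # 10 27.5 14080.0
```

So one cycle of A440 lasts about 2.27 ms, and only ten octaves of A (27.5 Hz through 14,080 Hz) fall inside the nominal hearing range.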

The amplitude of the sound waves is what we experience as loudness or volume. The amplitude of many sounds follows a characteristic attack-decay-sustain-release pattern (the ADSR envelope). For example, if you strike a piano key, the sound quickly grows (attack), peaks, and then starts to fall off (decay), but continues to sustain while the key is held down. Finally, the sound falls off rapidly when the key is released (release).
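An ADSR envelope can be modeled as a function from time to amplitude. The sketch below assumes straight-line segments and arbitrary default timings (10 ms attack, 200 ms decay, sustain at 60% of peak, 300 ms release); real synthesizers often use curved segments and expose these values as adjustable parameters. If the key is released before the sustain phase is reached, the release starts from whatever level the envelope had at that moment.

```python
def _held_level(t: float, attack: float, decay: float, sustain: float) -> float:
    """Envelope level while the key is still held down."""
    if t <= 0.0:
        return 0.0
    if t < attack:
        return t / attack                                    # attack: rise 0 -> 1
    if t < attack + decay:
        return 1.0 - (1.0 - sustain) * (t - attack) / decay  # decay: fall 1 -> sustain
    return sustain                                           # sustain: hold steady


def adsr(t: float, release_at: float, attack: float = 0.01, decay: float = 0.2,
         sustain: float = 0.6, release: float = 0.3) -> float:
    """Amplitude (0.0-1.0) at t seconds after key-down; the key is let go at release_at.

    The default timings are illustrative assumptions, not values from any instrument.
    """
    if t < release_at:
        return _held_level(t, attack, decay, sustain)
    start = _held_level(release_at, attack, decay, sustain)
    return max(0.0, start * (1.0 - (t - release_at) / release))  # release: fall to 0


# A note held for one second, sampled at a few points in time:
print([round(adsr(t, release_at=1.0), 2) for t in (0.0, 0.25, 0.5, 1.0, 1.15, 1.5)])
# [0.0, 0.6, 0.6, 0.6, 0.3, 0.0]
```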

The shape of the pattern within each cycle is what we experience as the timbre (TAM-ber), or tone quality, of the sound. It is what distinguishes a flute from a violin, even when both are playing A440.
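One way to see that timbre lives in the shape of each cycle is to render several waveforms at the same frequency. In this hypothetical sketch, each shape function maps a phase (the number of cycles elapsed) to a sample value between -1 and 1; sine, square, and sawtooth are textbook synthetic shapes chosen for illustration, not models of a real flute or violin. A frequency of 441 Hz is used instead of 440 Hz only so that each cycle spans exactly 100 samples at the assumed 44,100 Hz sample rate.

```python
import math
from typing import Callable, List

SAMPLE_RATE = 44_100  # samples per second; the CD rate, assumed here for illustration


# Each shape maps a phase (cycles elapsed) to a value in [-1, 1].
def sine(phase: float) -> float:
    return math.sin(2.0 * math.pi * phase)


def square(phase: float) -> float:
    return 1.0 if phase % 1.0 < 0.5 else -1.0


def sawtooth(phase: float) -> float:
    return 2.0 * (phase % 1.0) - 1.0


def render(shape: Callable[[float], float], freq_hz: float, seconds: float,
           sample_rate: int = SAMPLE_RATE) -> List[float]:
    """Sample a periodic shape at freq_hz for the given duration."""
    count = round(seconds * sample_rate)
    return [shape(freq_hz * i / sample_rate) for i in range(count)]


waves = {f.__name__: render(f, 441.0, 0.01) for f in (sine, square, sawtooth)}
for name, samples in waves.items():
    print(f"{name:8s} sample 10 = {samples[10]:+.2f}")
```

All three waves repeat every 100 samples, so they share the same pitch, yet a tenth of the way through each cycle the sine is at about 0.59, the square at 1.0, and the sawtooth at -0.8. That difference in shape is what the ear hears as a difference in timbre.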

Synthesis algorithms model all of the above qualities when they interpret a MIDI event to produce a sound. The MIDI note number is mapped to a frequency. The velocity scales the overall amplitude of the ADSR envelope. For sustainable sounds, the note OFF event initiates the release portion of the envelope. The actual waveform can be shaped by sampled data from real sounds or can be completely artificial.
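The usual default note-to-frequency mapping assumes twelve-tone equal temperament with MIDI note 69 tuned to A440, so each step in note number is a semitone (a frequency ratio of 2 to the power 1/12) and every 12 steps is an octave. The sketch below uses that formula; the linear velocity-to-amplitude curve is a simplifying assumption, since instruments apply a variety of velocity curves. The function names are hypothetical.

```python
A4_NOTE = 69        # MIDI note number conventionally tuned to A440
A4_FREQ_HZ = 440.0


def note_to_freq(note: int, a4_hz: float = A4_FREQ_HZ) -> float:
    """Equal-tempered frequency in Hz for a MIDI note number (0-127)."""
    return a4_hz * 2.0 ** ((note - A4_NOTE) / 12)


def peak_amplitude(velocity: int) -> float:
    """Map MIDI velocity 0-127 linearly onto a peak amplitude 0.0-1.0 (assumed curve)."""
    if not 0 <= velocity <= 127:
        raise ValueError("velocity must be 0-127")
    return velocity / 127


print(round(note_to_freq(43), 2), round(peak_amplitude(99), 3))  # 98.0 0.78
```

With these assumptions, the earlier "note 43 ON with velocity 99" event sounds at about 98 Hz (G2, 17 semitones below middle C) with a peak amplitude of about 0.78, and the peak would then scale an envelope such as the ADSR sketch above.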

Feedback to feedback@coolwisdom.com.

Copyright 2003 by Mark Jones.

Last updated January 14, 2004.